Recap on Residual Networks

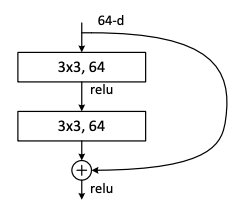

Linking the paper right here: Deep Residual Learning for Image Recognition. The main idea behind the paper is to take the output of an earlier layer, skip one or more layers, and add it to the output of the stacked layers that were skipped. This connection is referred to as a shortcut connection. Let's now see how this works programmatically using one of the fundamental residual block diagrams.
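Written out, following the formulation in the paper, with x the input to the block and F the residual function computed by the skipped (stacked) layers with weights W_i, the block output y is:

$$
\mathbf{y} = \mathcal{F}(\mathbf{x}, \{W_i\}) + \mathbf{x}
$$

The addition is element-wise, so x and F(x) must have the same dimensions; the paper handles the case where they differ with a linear projection on the shortcut.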

This block takes an input with 64 channels. In the paper, it belongs to the first group of residual blocks, conv2_x, of the 18-layer ResNet in Table 1. Since we are focusing on this block for now, I'll leave the surrounding blocks until later. Table 1 gives the output size of conv2_x as 56 x 56. Working backwards from there: the block's two layers are Conv2d layers with 64 filters, kernel size 3, stride 1 and padding 1, so each of them preserves the spatial size, and the input to the residual block must therefore also be 56 x 56.

The input to the residual block therefore has dimensions [1, 64, 56, 56], following PyTorch's [N, C, H, W] ordering (batch size, channels, height, width), here with a batch size of 1.
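A quick way to check this reasoning is to pass a random tensor of that shape through a single such convolution. This is a minimal sketch; the random input is just a stand-in for real feature maps.

```python
import torch
import torch.nn as nn

# Dummy input matching the shape above: batch of 1, 64 channels, 56 x 56
x = torch.randn(1, 64, 56, 56)

# One 3x3 convolution with 64 filters, stride 1 and padding 1, as in conv2_x
conv = nn.Conv2d(in_channels=64, out_channels=64, kernel_size=3, stride=1, padding=1)

# Spatial size: (H + 2*padding - kernel_size) // stride + 1 = (56 + 2 - 3) // 1 + 1 = 56
out = conv(x)
print(out.shape)  # torch.Size([1, 64, 56, 56])
```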

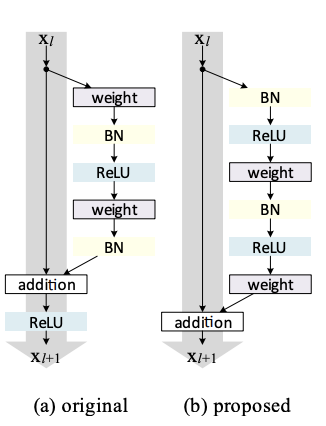

Next, let's create the stack of convolution layers. The paper states, "We adopt batch normalization (BN) right after each convolution and before activation", so we add nn.BatchNorm2d after each convolution in the stack; its only required argument is the number of output channels from the preceding convolution.
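One way to write such a stack is with nn.Sequential. This is a minimal sketch, assuming bias=False on the convolutions (a common choice, since the BatchNorm layer that follows has its own learnable shift) and leaving out the second ReLU, because the paper applies it only after the shortcut addition.

```python
import torch
import torch.nn as nn

# conv -> BN -> ReLU -> conv -> BN; the final ReLU comes after the shortcut addition
stack = nn.Sequential(
    nn.Conv2d(64, 64, kernel_size=3, stride=1, padding=1, bias=False),  # bias=False is an assumption
    nn.BatchNorm2d(64),  # number of channels from the previous conv
    nn.ReLU(inplace=True),
    nn.Conv2d(64, 64, kernel_size=3, stride=1, padding=1, bias=False),
    nn.BatchNorm2d(64),
)

x = torch.randn(1, 64, 56, 56)
out = stack(x)
print(out.shape)  # torch.Size([1, 64, 56, 56])
```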

I've used this form to keep things easy to follow. The recommended approach, building a custom class that inherits from the base class nn.Module, will be shown once we start dealing with repeating blocks and groups of repeating blocks.

The structure of this stack becomes clearer when compared with the residual blocks in the ResNetv2 paper by Kaiming He et al.: in that paper's comparison, the left block is the one we're currently building and the right block is the one proposed for use in ResNetv2.
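To make the difference in ordering concrete, here is a minimal sketch of both residual branches side by side. It assumes the ResNetv2 block is the full pre-activation variant (BN and ReLU placed before each convolution, nothing after the addition); the helper conv3x3 and the names original_branch and preact_branch are hypothetical, and bias=False is again an assumption.

```python
import torch
import torch.nn as nn

def conv3x3(channels):
    # Hypothetical helper: 3x3 conv that keeps the spatial size (stride 1, padding 1)
    return nn.Conv2d(channels, channels, kernel_size=3, stride=1, padding=1, bias=False)

c = 64
# Block built here: conv -> BN -> ReLU -> conv -> BN, then add, then ReLU
original_branch = nn.Sequential(
    conv3x3(c), nn.BatchNorm2d(c), nn.ReLU(), conv3x3(c), nn.BatchNorm2d(c)
)
# Full pre-activation (ResNetv2): BN -> ReLU -> conv -> BN -> ReLU -> conv, then add
preact_branch = nn.Sequential(
    nn.BatchNorm2d(c), nn.ReLU(), conv3x3(c), nn.BatchNorm2d(c), nn.ReLU(), conv3x3(c)
)

x = torch.randn(1, c, 56, 56)
y_original = torch.relu(original_branch(x) + x)  # activation applied after the addition
y_preact = preact_branch(x) + x                  # identity path left untouched
print(y_original.shape, y_preact.shape)
```

The practical difference is where the last activation sits: in the original block the ReLU after the addition also acts on the shortcut signal, while the pre-activation block keeps the shortcut a pure identity.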

Now onto the skip connection. For this block the shortcut is an identity mapping: its output is simply the block's input, carried around the stack of layers unchanged.

The block's output is obtained by adding the output of the skip connection to the output of the stack of convolution layers; as in the paper, the second ReLU is applied after this addition.
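Putting the stack and the skip connection together, the forward pass of the block can be sketched as follows. This reuses the same nn.Sequential stack (with the same bias=False assumption) and is meant as an illustration rather than the author's exact code.

```python
import torch
import torch.nn as nn

# Same conv -> BN -> ReLU -> conv -> BN stack as before
stack = nn.Sequential(
    nn.Conv2d(64, 64, kernel_size=3, stride=1, padding=1, bias=False),
    nn.BatchNorm2d(64),
    nn.ReLU(inplace=True),
    nn.Conv2d(64, 64, kernel_size=3, stride=1, padding=1, bias=False),
    nn.BatchNorm2d(64),
)
relu = nn.ReLU()

x = torch.randn(1, 64, 56, 56)
identity = x                 # skip connection: the input, unchanged
out = stack(x)               # residual function F(x)
out = relu(out + identity)   # element-wise addition, then the second ReLU
print(out.shape)  # torch.Size([1, 64, 56, 56])
```

The addition only works because the stack preserves both the channel count and the spatial size; otherwise the shapes of out and identity would not match.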

~ recommended implementation

Let's now use the recommended approach: a custom class inheriting from the base class nn.Module. We will later extend this class to handle the dashed shortcuts (which I'll explain) and the repeating blocks.
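A minimal sketch of such a class for the identity-shortcut case only, assuming input and output shapes match as in conv2_x. The class name ResidualBlock is hypothetical, and the dashed (projection) shortcuts are deliberately left out here.

```python
import torch
import torch.nn as nn

class ResidualBlock(nn.Module):
    """Hypothetical basic residual block with an identity shortcut."""

    def __init__(self, channels: int = 64):
        super().__init__()
        # Two 3x3 convs (stride 1, padding 1), each followed by BatchNorm
        self.conv1 = nn.Conv2d(channels, channels, kernel_size=3, stride=1, padding=1, bias=False)
        self.bn1 = nn.BatchNorm2d(channels)
        self.conv2 = nn.Conv2d(channels, channels, kernel_size=3, stride=1, padding=1, bias=False)
        self.bn2 = nn.BatchNorm2d(channels)
        self.relu = nn.ReLU(inplace=True)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        identity = x                              # shortcut connection
        out = self.relu(self.bn1(self.conv1(x)))  # conv -> BN -> ReLU
        out = self.bn2(self.conv2(out))           # conv -> BN
        out = out + identity                      # add the shortcut to the stack's output
        return self.relu(out)                     # second ReLU after the addition

block = ResidualBlock(64)
x = torch.randn(1, 64, 56, 56)
y = block(x)
print(y.shape)  # torch.Size([1, 64, 56, 56])
```

Registering each layer as an attribute lets nn.Module track their parameters automatically, and the forward method makes the shortcut explicit, which is what makes it easy to extend later.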