A tensor

It's been great dealing with all the 1-D concepts in algebra and the 2-D functions in vector calculus, and now here we are, diving into the domain of high-dimensional mathematical computation. This is how we start, from scalar beginnings, and the best is yet to come.

From a physicist's perspective, what we will be building can be considered function approximators. From an engineering perspective, they're more analogous to controllers and adaptive filters. For mathematicians, they're universal approximators, as described by the universal approximation theorem.

Generally, we will treat these tools as likelihood estimators.

So, what is a tensor?

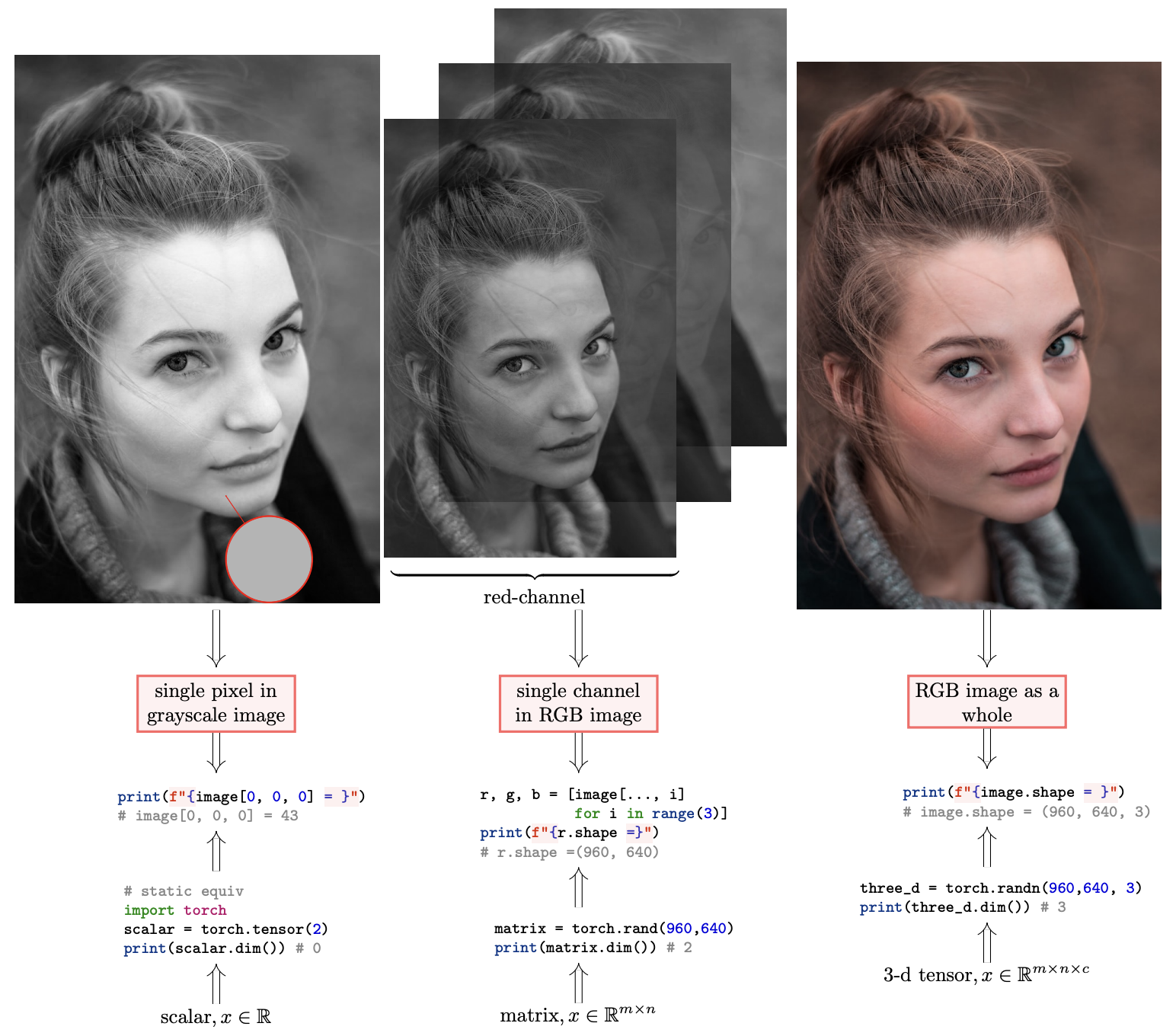

A tensor is a generalization of scalars, vectors, matrices and so forth. It is basically an n-dimensional data representation: a rank-0 tensor is a scalar, a rank-1 tensor is a vector, and a rank-2 tensor is a matrix.

Simply put, the rank of a tensor is the number of dimensions (axes) it has.
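To make the idea of rank concrete, here is a minimal sketch in PyTorch. The variable names are only illustrative; `ndim` reports the number of axes and `shape` the size along each one.

```python
import torch

scalar = torch.tensor(3.14)               # rank 0: a single number
vector = torch.tensor([1.0, 2.0, 3.0])    # rank 1: one axis
matrix = torch.tensor([[1.0, 2.0],
                       [3.0, 4.0]])       # rank 2: rows and columns
cube = torch.zeros(2, 3, 4)               # rank 3: a stack of two 3x4 matrices

for name, t in [("scalar", scalar), ("vector", vector),
                ("matrix", matrix), ("cube", cube)]:
    # ndim is the number of axes; shape lists the size along each axis
    print(f"{name}: ndim={t.ndim}, shape={tuple(t.shape)}")
```

Note that "rank" here means the number of axes, which is not the same thing as the rank of a matrix in linear algebra.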

Let's see this better from an image.

Photo by Almos Bechtold on Unsplash

In the illustrations above, a vector is not drawn explicitly, but you can think of it as a single row or column of the grayscale image: a tensor with one dimension.
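As a rough sketch of that picture, the snippet below uses a small synthetic grid as a stand-in for a grayscale photo (it is not the image above, and the 4x6 size is an arbitrary assumption). Indexing one axis away drops the rank by one each time.

```python
import torch

# Stand-in for a grayscale photo: 4 rows (height) by 6 columns (width)
image = torch.arange(24, dtype=torch.float32).reshape(4, 6)

row = image[0]         # first row of pixels: a rank-1 tensor of length 6
column = image[:, 0]   # first column of pixels: a rank-1 tensor of length 4
pixel = image[0, 0]    # one pixel intensity: a rank-0 tensor

print(image.ndim, row.ndim, column.ndim, pixel.ndim)  # 2 1 1 0
```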

Tensor networks are a natural way to parameterize interesting and powerful machine learning models.

Why PyTorch...

PyTorch is well optimized for deep learning on GPUs and CPUs, with automatic differentiation and tooling for the hardware stack such as TensorRT-LLM and torch/ao. It is also very flexible: the code is plain Python, and it interoperates with other languages, for example in a standalone C++ program via TorchScript compilation and in JavaScript via transformers.js.

If you are more curious and want to understand the internals of PyTorch, this blog walks through how it is built and can be fun to recreate:

Internals of Pytorch

PyTorch therefore provides features for:

🦾 creating n-dimensional tensors; think of it as NumPy on steroids

🚀 automatic differentiation for building and training neural networks
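Both features can be sketched in a few lines. This is only an illustrative example, assuming NumPy is installed alongside PyTorch; the function y = sum(x²) is chosen simply because its gradient, 2x, is easy to check by hand.

```python
import numpy as np
import torch

# Tensor creation mirrors NumPy, and arrays convert in both directions
array = np.arange(6, dtype=np.float32).reshape(2, 3)
tensor = torch.from_numpy(array)    # shares memory with `array`
doubled = (tensor * 2).numpy()      # result converted back to a NumPy array

# Automatic differentiation: y = sum(x^2), so dy/dx = 2x
x = torch.tensor([1.0, 2.0, 3.0], requires_grad=True)
y = (x ** 2).sum()
y.backward()                        # populates x.grad with dy/dx
print(x.grad)                       # tensor([2., 4., 6.])
```

Setting `requires_grad=True` is what asks PyTorch to record the operations on `x` so that `backward()` can compute the gradient.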

It's now time to play with gradients in the next section, delving into differentiation, how computations are persisted in graphs, and what goes on in the backward call.