By Marvin Desmond

Posted 7th June 2023

Neuron, the (to-be) plugin for Flutter

The inspiration for this app

I've always had a heart for Machine Learning in production, and smooth deployment in apps has been a core focus among other production pipelines. I also care about flexible tools and cross-compatibility among frameworks. That is why, when I browsed the available Machine Learning plugins for Flutter, I wanted more than what I got.

tflite_flutter

This is a plugin by the TensorFlow team for Flutter. However, the documentation is fairly limited: it only walks through inference on a [1, 5] float32 input. It does not show any preprocessing or postprocessing tools that belong to a Machine Learning pipeline. Is there, say, a non-max suppression function for a model whose outputs are raw bounding boxes? What about a model whose preprocessing is not embedded in the model layers? The application I have built (on which the new Flutter plugin, Neuron, will be based) handles processing for classification and detection (already implemented), and will be extended to text, audio and other tasks.

pytorch_mobile

This, the most-liked Flutter plugin for PyTorch, only loads models and labels from a preconfigured location. This means you have to embed the model before you build the app. The library also lacks post-processing functions.

Both of these plugins confine you to the inference part of model deployment and monitoring. There are also no tests for the various Machine Learning tasks, nor any way to write them. Nor is there an active community offering further support, which is something this new plugin-to-be also aims to change.

Above all, I wanted a plugin that supports preprocessing, inference, and postprocessing for TensorFlow and PyTorch models alike, that is, cross-compatibility.

I'm happy to be corrected on the details of the above libraries, but I assure you, Neuron will be an awesome addition to the list of ML plugins.

The inception

As with every other project I have planned, and the minor ones done before, there are accompanying tools and apps I build to support and test the core platforms.

Before building the app, which precedes the plugin, I had to understand the official mobile documentation for Machine (and Deep) Learning on Android.

This involves API references, demo applications, and integrations such as using Google Play Services.

For TensorFlow, I scoured through the guide and the API reference. To start, I needed to understand the very essentials, so I avoided the abstracted classifier and detection methods in the documentation. The most helpful part of the docs was this page, which took me through every stage of a custom inference pipeline on Android using the TensorFlow Lite Support Library, as opposed to the more abstracted Task Library.
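To make the Support Library's preprocessing stage concrete, here is a dependency-free sketch of what it produces for an image model: packed ARGB pixels (as Android's `Bitmap.getPixels` returns them) turned into a float32 NHWC buffer, each channel normalized as `(value - mean) / std`, which is the formula the Support Library's `NormalizeOp` applies. `TfliteInput` and `toNhwc` are hypothetical names, resizing is left out, and the mean/std of 127.5 (mapping pixels to [-1, 1]) is an assumption; the right values depend on how the model was trained.

```java
import java.util.Arrays;

/**
 * Hypothetical helper: turns packed ARGB pixels into the float32 NHWC buffer
 * (batch 1, height, width, RGB) that TensorFlow Lite image models usually take.
 */
final class TfliteInput {
    private TfliteInput() {}

    static float[] toNhwc(int[] argb, int width, int height, float mean, float std) {
        if (argb.length != width * height) {
            throw new IllegalArgumentException("pixel count must equal width * height");
        }
        float[] out = new float[width * height * 3];
        int i = 0;
        // Pixels are row-major, so filling sequentially yields NHWC order.
        for (int pixel : argb) {
            int r = (pixel >> 16) & 0xFF;
            int g = (pixel >> 8) & 0xFF;
            int b = pixel & 0xFF;
            // Same formula as the Support Library's NormalizeOp: (x - mean) / std.
            out[i++] = (r - mean) / std;
            out[i++] = (g - mean) / std;
            out[i++] = (b - mean) / std;
        }
        return out;
    }

    public static void main(String[] args) {
        int[] pixels = {0xFFFF0000, 0xFF00FF00}; // one red and one green pixel
        // 127.5 / 127.5 maps [0, 255] to [-1, 1] (an assumption about the model).
        System.out.println(Arrays.toString(toNhwc(pixels, 2, 1, 127.5f, 127.5f)));
        // prints [1.0, -1.0, -1.0, -1.0, 1.0, -1.0]
    }
}
```

On Android, the resulting buffer is what gets handed to the interpreter as its input tensor.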

For PyTorch, I started with the official Android demo blog. To understand the core methods used in the inference pipeline, I also went through the Java native docs for PyTorch. From here, we have a good start.
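The PyTorch side differs mainly in layout. As far as I can tell, `TensorImageUtils.bitmapToFloat32Tensor` in PyTorch Android scales each channel to [0, 1], normalizes it with the torchvision mean and standard deviation, and writes the result planar (NCHW: all red values, then all green, then all blue). The sketch below reproduces that logic without the library so it runs anywhere; `TorchInput` is a hypothetical name.

```java
/**
 * Dependency-free sketch of the conversion PyTorch Android's
 * TensorImageUtils.bitmapToFloat32Tensor performs: scale to [0, 1],
 * normalize per channel, and write planar NCHW (all R, then all G, then all B).
 */
final class TorchInput {
    // Values of TensorImageUtils.TORCHVISION_NORM_MEAN_RGB and _STD_RGB.
    static final float[] MEAN = {0.485f, 0.456f, 0.406f};
    static final float[] STD = {0.229f, 0.224f, 0.225f};

    private TorchInput() {}

    static float[] toNchw(int[] argb, int width, int height) {
        int plane = width * height;
        if (argb.length != plane) {
            throw new IllegalArgumentException("pixel count must equal width * height");
        }
        float[] out = new float[3 * plane];
        for (int i = 0; i < plane; i++) {
            int p = argb[i];
            out[i] = (((p >> 16) & 0xFF) / 255.0f - MEAN[0]) / STD[0];        // R plane
            out[plane + i] = (((p >> 8) & 0xFF) / 255.0f - MEAN[1]) / STD[1]; // G plane
            out[2 * plane + i] = ((p & 0xFF) / 255.0f - MEAN[2]) / STD[2];    // B plane
        }
        return out;
    }

    public static void main(String[] args) {
        int[] pixels = {0xFF000000, 0xFFFFFFFF}; // black, white
        float[] t = toNchw(pixels, 2, 1);
        // Indices 0 and 1 hold the red values of both pixels, before any green value.
        System.out.printf("R(black)=%.4f R(white)=%.4f%n", t[0], t[1]);
    }
}
```

Compared with the TensorFlow Lite buffer, the same pixels end up in a different order, which is one reason a cross-framework plugin needs its own preprocessing per framework.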

Flutter and the UI thread

The app had these controls in mind:

~ File access control: a storage directory that hosts the locally downloaded models for offline pre-processing, inference and post-processing, along with permissions to access, modify and delete files in that directory, in my case /storage/emulated/0/Android/data/com.example.flutter_native/files/

~ Network access control: with internet access, requested models that are not yet downloaded are fetched from the Git releases; without it, the app notifies the user and asks them to use an already downloaded model.

Another case of this is the checkFileComplete function. With internet access, it checks not only that a model file exists locally but also that it is fully downloaded. Without internet access, it only checks that the file exists and, hoping for the best, assumes the model file is complete.

~ Models access (Git LFS): model access is just the URLs pointing to the Git releases in the repository of test models for Neuron. Dio then downloads the files to local storage, and the download progress is rendered in the UI through a function callback passed across widgets.

~ Inference UI: I had to ensure the right data is shared across widgets, and only the right state of that data at any given time, for either a classification or a detection task (with more tasks to be added later).

~ Flutter MethodChannels: following the concept of Flutter plugins and Java interop, MethodChannel was essential as the platform for communicating with the plugin's native codebase, since the core of the toolkit is in Java.
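The checkFileComplete logic above can be sketched as follows. This is a hypothetical Java port (the post does not show the app's own Dart function), assuming that, when online, the size the server advertises, such as a `Content-Length` header, is the measure of completeness; `ModelFiles` and `isModelFileComplete` are illustrative names.

```java
import java.io.File;
import java.util.OptionalLong;

/** Hypothetical Java port of the idea behind checkFileComplete. */
final class ModelFiles {
    private ModelFiles() {}

    /**
     * @param file       local model file, e.g. under the app's files/ directory
     * @param remoteSize size advertised by the server (such as a Content-Length
     *                   header) when online; empty when there is no connection
     */
    static boolean isModelFileComplete(File file, OptionalLong remoteSize) {
        if (!file.isFile()) {
            return false; // never downloaded, or deleted from the catalog
        }
        if (!remoteSize.isPresent()) {
            return true; // offline: existence is all we can check, so hope for the best
        }
        return file.length() == remoteSize.getAsLong(); // online: sizes must match
    }

    public static void main(String[] args) throws Exception {
        File f = File.createTempFile("model", ".tflite");
        f.deleteOnExit();
        java.nio.file.Files.write(f.toPath(), new byte[1024]);
        System.out.println(isModelFileComplete(f, OptionalLong.of(1024))); // true: sizes match
        System.out.println(isModelFileComplete(f, OptionalLong.of(4096))); // false: partial download
        System.out.println(isModelFileComplete(f, OptionalLong.empty()));  // true: offline
    }
}
```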

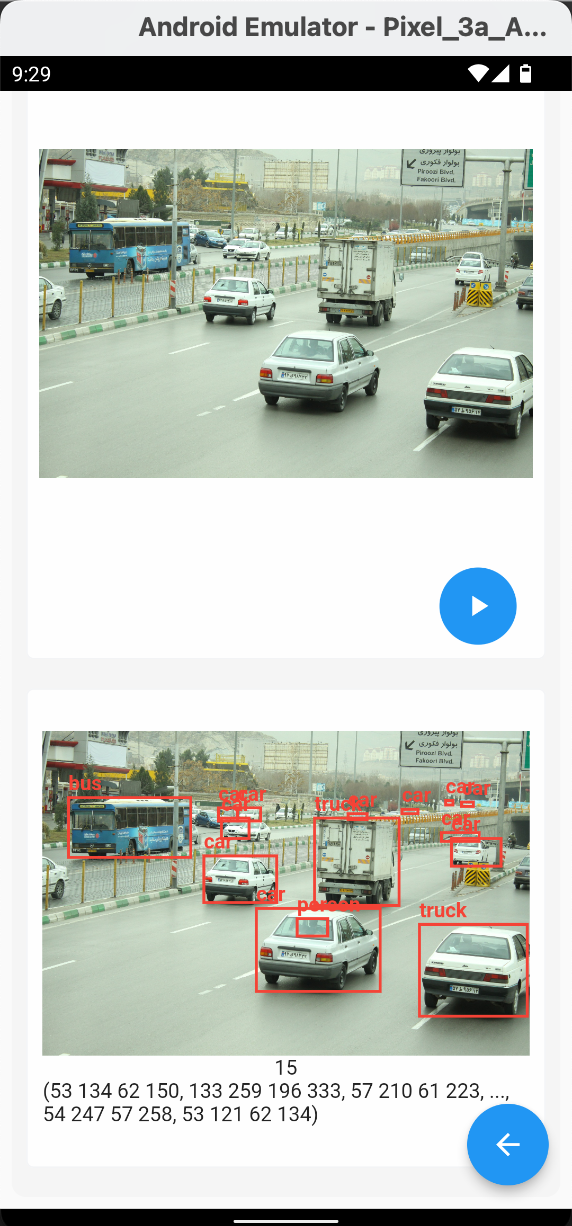

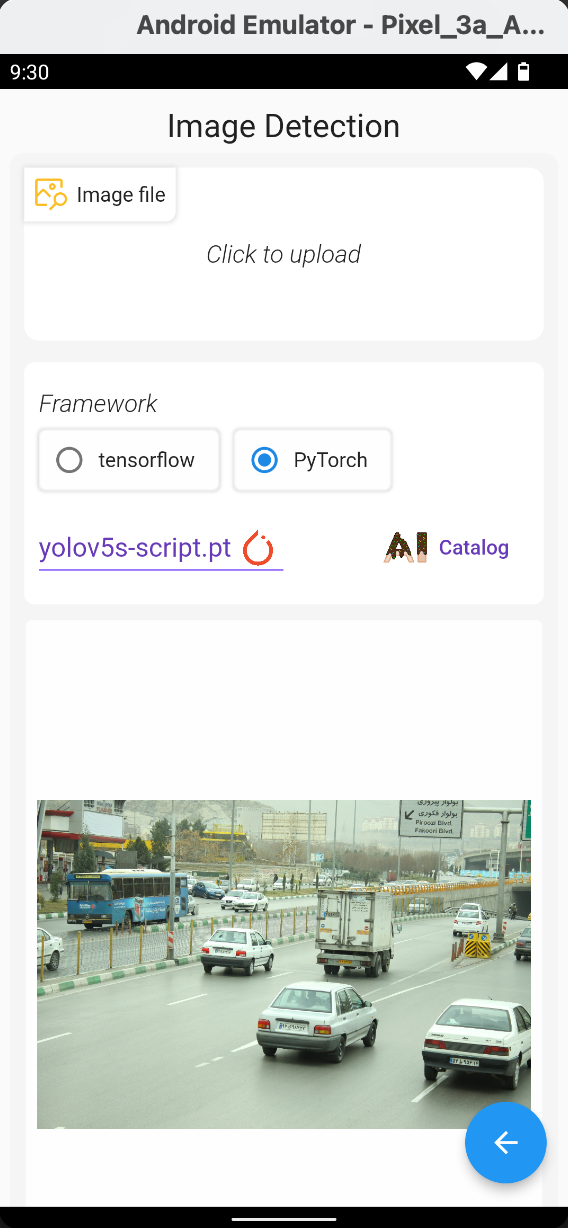

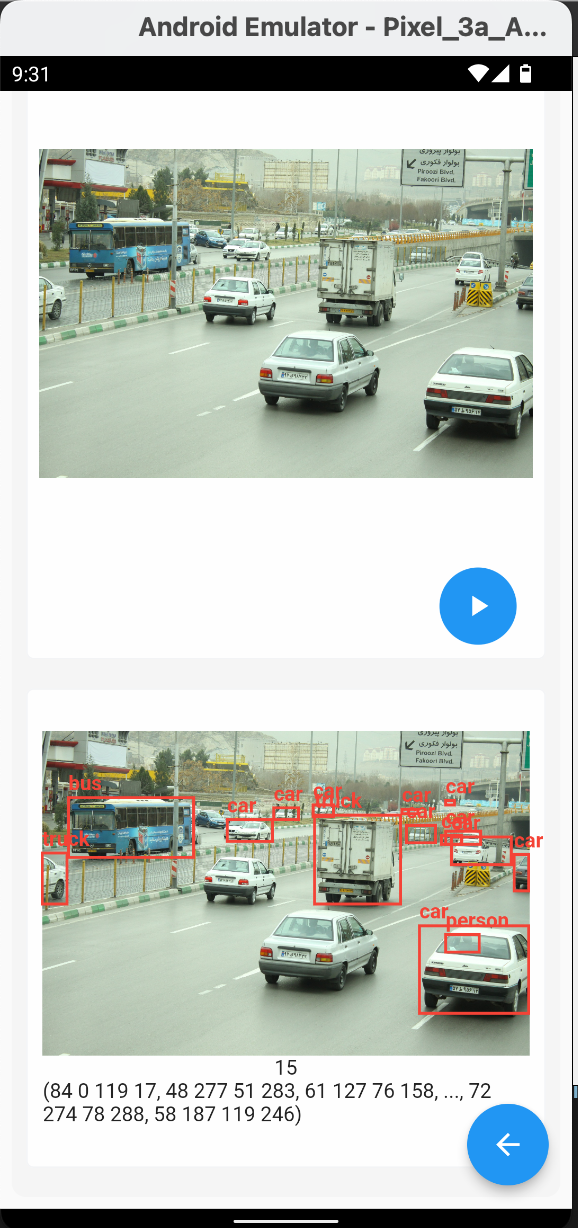

Screenshots of the app

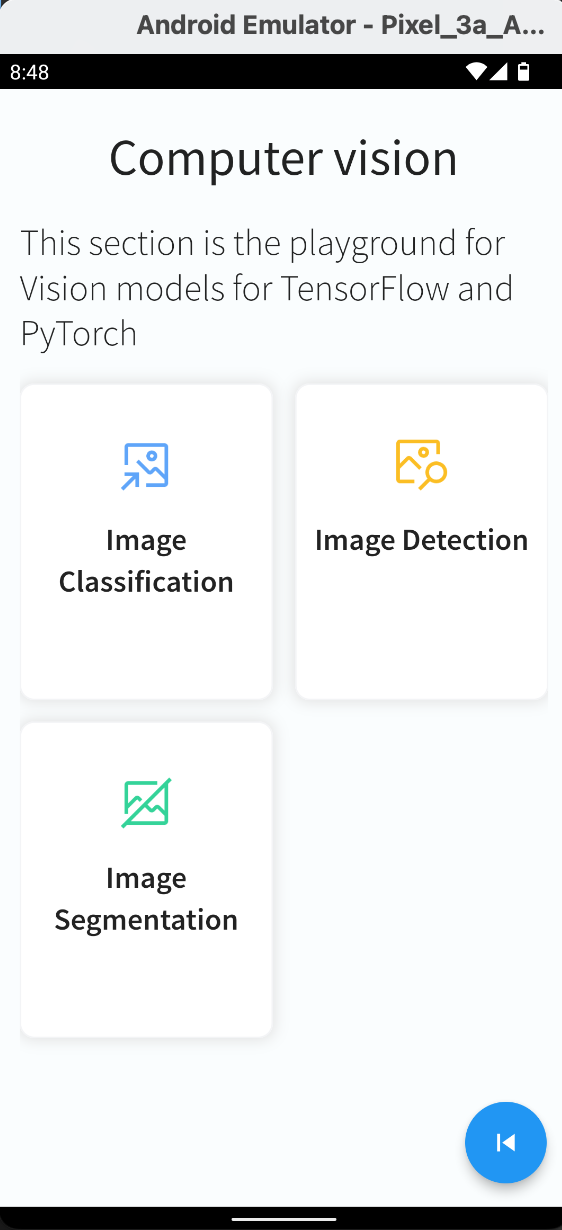

The app's root screen has model cards for tasks such as classification, detection, segmentation, and more to come.

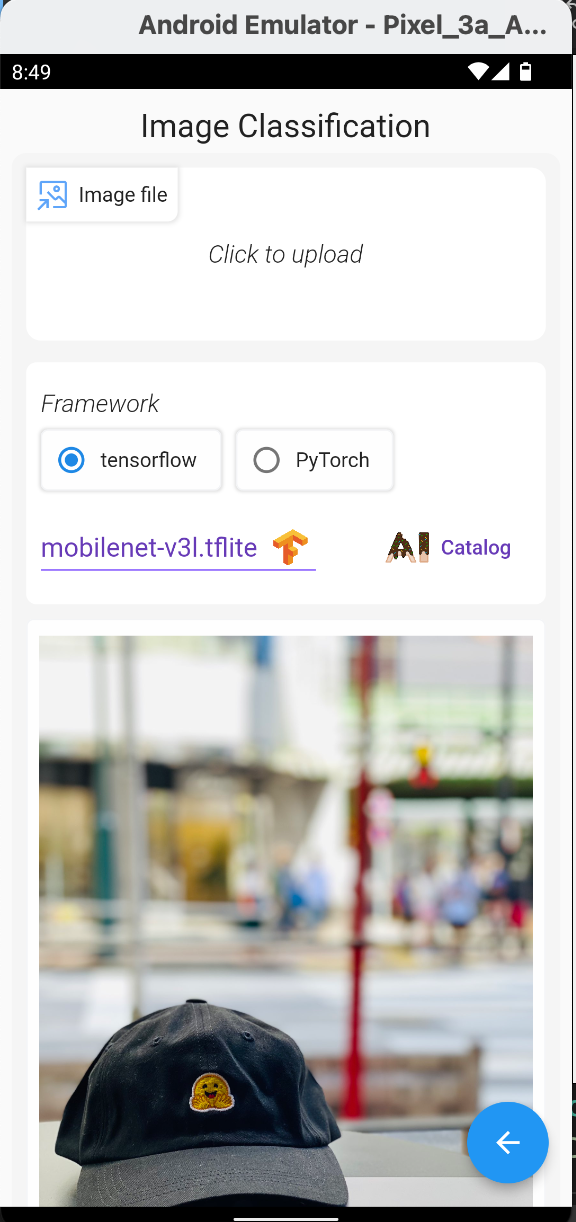

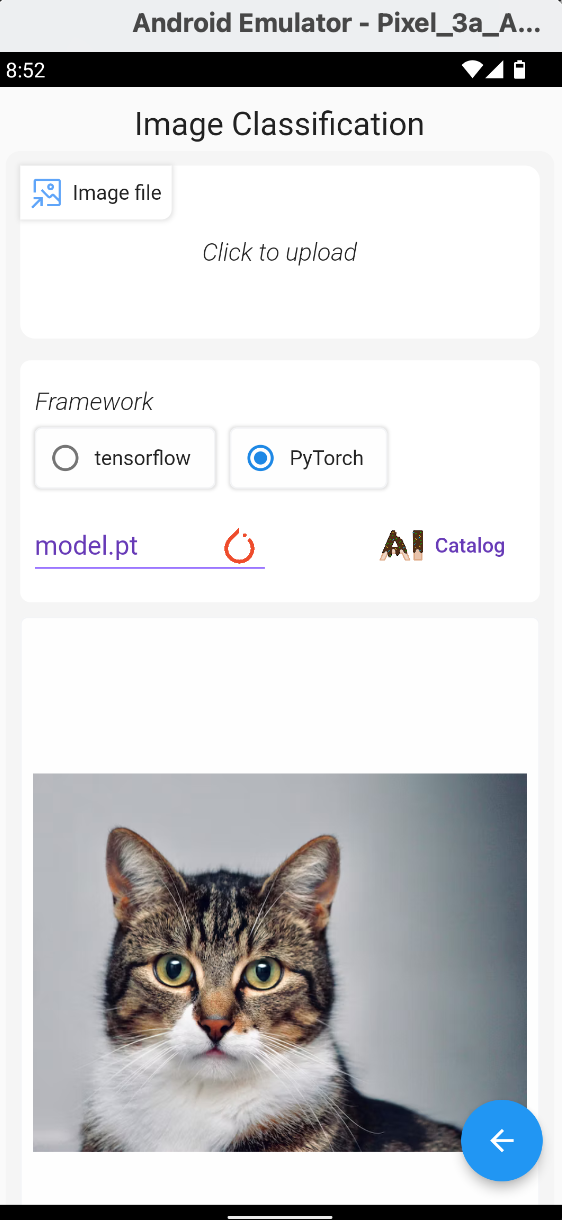

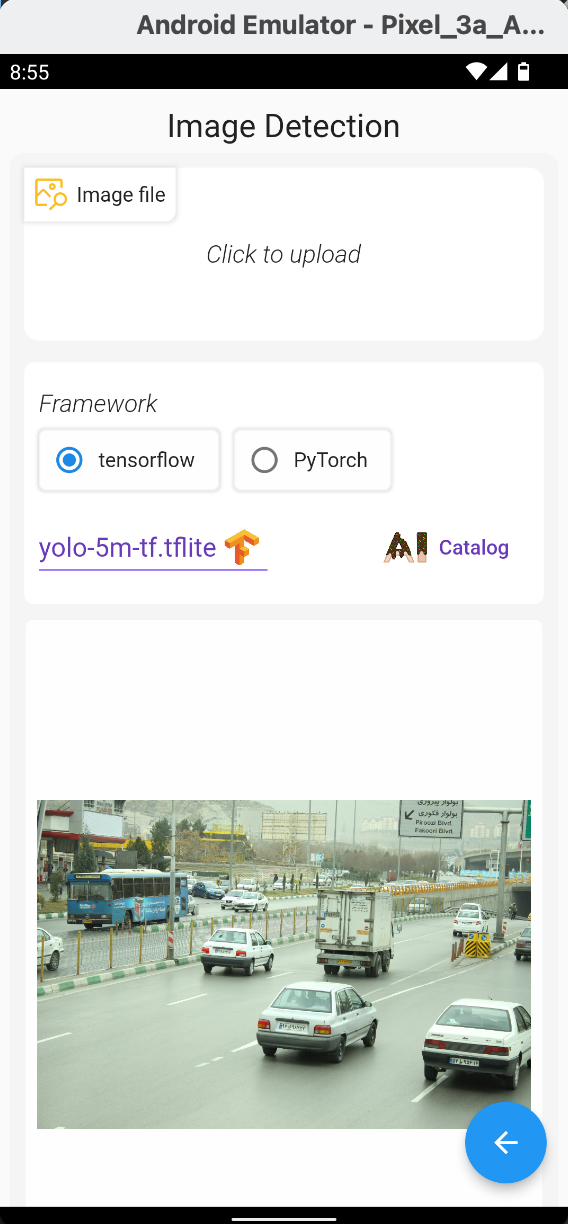

Clicking on a card opens a model playground that, depending on a tag parameter, loads the TensorFlow and PyTorch models into separate dropdowns for the sake of UI interactivity.
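A minimal sketch of how one tag parameter can feed two dropdowns, assuming a simple in-memory registry. In the app this filtering lives in the Flutter (Dart) UI layer; it is written in Java here only to keep the examples in one language, and `ModelRegistry`, `Framework` and `dropdownItems` are hypothetical names. The registered entries simply reuse model names mentioned in this post.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.stream.Collectors;

/** Hypothetical in-memory registry behind the playground's two dropdowns. */
final class ModelRegistry {
    enum Framework { TENSORFLOW, PYTORCH }

    private static final class Entry {
        final String name;
        final String tag; // task tag, e.g. "classification" or "detection"
        final Framework framework;

        Entry(String name, String tag, Framework framework) {
            this.name = name;
            this.tag = tag;
            this.framework = framework;
        }
    }

    private final List<Entry> entries = new ArrayList<>();

    void register(String name, String tag, Framework framework) {
        entries.add(new Entry(name, tag, framework));
    }

    /** Items for one dropdown: models matching the card's tag and a single framework. */
    List<String> dropdownItems(String tag, Framework framework) {
        return entries.stream()
                .filter(e -> e.tag.equals(tag) && e.framework == framework)
                .map(e -> e.name)
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        ModelRegistry registry = new ModelRegistry();
        registry.register("mobilenet-v3s", "classification", Framework.TENSORFLOW);
        registry.register("model.pt", "classification", Framework.PYTORCH);
        registry.register("yolov5m", "detection", Framework.TENSORFLOW);
        System.out.println(registry.dropdownItems("classification", Framework.TENSORFLOW)); // [mobilenet-v3s]
        System.out.println(registry.dropdownItems("classification", Framework.PYTORCH));    // [model.pt]
    }
}
```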

The playground has a picture upload, which currently loads from the gallery, and a model upload: when a model is explicitly selected in a dropdown, it is downloaded from the cloud if not found locally, and then an instance of the model is initialized by communicating with the Java codebase via the MethodChannel. If the model is already found, the instance is initialized directly.
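On the Java side, a MethodChannel handler boils down to dispatching on the method name and answering through a result callback. In a real plugin this would be the body of `MethodCallHandler.onMethodCall(MethodCall call, MethodChannel.Result result)`, reading `call.method` and `call.argument("path")`. The sketch below swaps in a small `Reply` interface mirroring `Result`'s `success`, `error` and `notImplemented` so it runs without the Flutter engine. The method names `loadModel` and `isLoaded`, the `path` argument, and `NeuronDispatcher` are all hypothetical, and a plain `Object` stands in for an `Interpreter` or `Module` instance.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical handler body; method names and argument keys are illustrative. */
final class NeuronDispatcher {
    // Initialized "models" keyed by file path. A real plugin would hold an
    // Interpreter (TensorFlow Lite) or Module (PyTorch) here instead of Object.
    private final Map<String, Object> models = new HashMap<>();

    void onMethodCall(String method, Map<String, Object> args, Reply reply) {
        switch (method) {
            case "loadModel": {
                Object path = args.get("path");
                if (!(path instanceof String)) {
                    reply.error("BAD_ARGS", "'path' must be a string", null);
                    return;
                }
                // Initialize once; selecting the same model again reuses the instance.
                models.computeIfAbsent((String) path, p -> new Object());
                reply.success(true);
                return;
            }
            case "isLoaded":
                reply.success(models.containsKey(args.get("path")));
                return;
            default:
                reply.notImplemented(); // unknown method name
        }
    }

    public static void main(String[] args) {
        Reply print = new Reply() {
            public void success(Object value) { System.out.println("success: " + value); }
            public void error(String code, String message, Object details) { System.out.println("error: " + code); }
            public void notImplemented() { System.out.println("not implemented"); }
        };
        Map<String, Object> call = new HashMap<>();
        call.put("path", "models/mobilenet-v3s.tflite");
        NeuronDispatcher handler = new NeuronDispatcher();
        handler.onMethodCall("isLoaded", call, print);  // success: false
        handler.onMethodCall("loadModel", call, print); // success: true
        handler.onMethodCall("isLoaded", call, print);  // success: true
    }
}

/** Stand-in for io.flutter.plugin.common.MethodChannel.Result so the sketch runs without Flutter. */
interface Reply {
    void success(Object value);
    void error(String code, String message, Object details);
    void notImplemented();
}
```

Caching by path matches the behaviour described above: a model already initialized does not need another round trip through loading.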

There is also the picture render, which shows the uploaded image, or a default one by @hardmaru when no image has been selected.

"So cool to meet up with AK and the Gradio gang in Shibuya. Got myself some nice @HuggingFace swag" ~@hardmaru

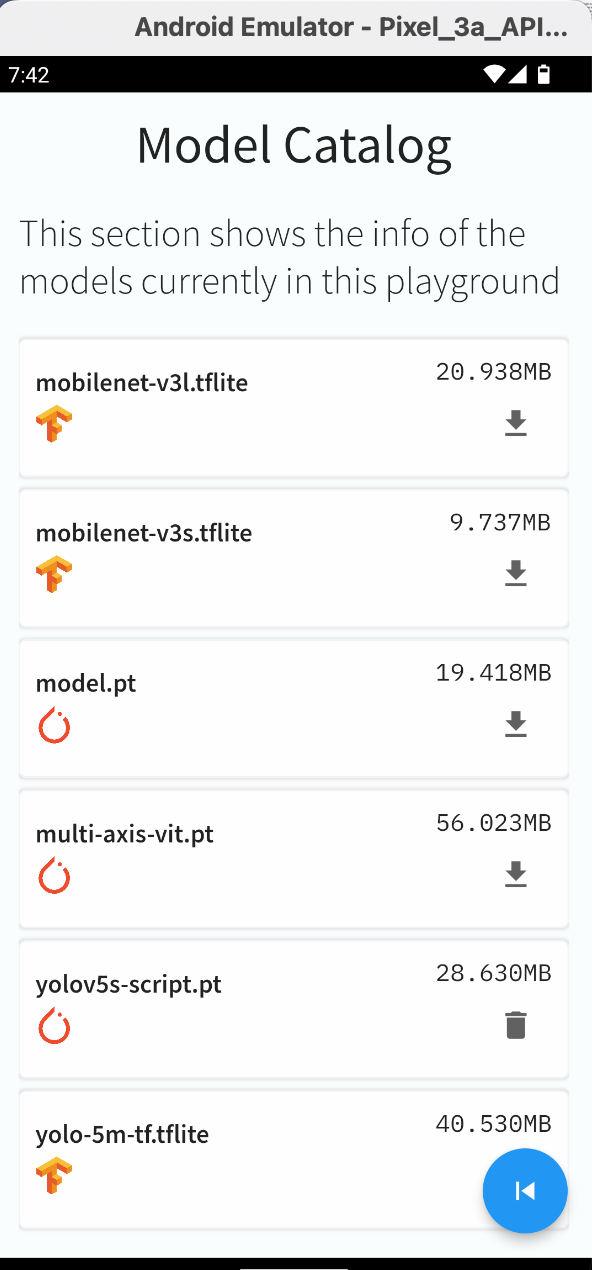

Models access and control

The models are managed in two ways: from the dropdown, which also initializes the model instance used in predictions, and from the model catalog, which acts as the model registry, so to speak. In the catalog, we can download or delete models.
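Downloading with progress and deleting are the two catalog operations. In the app, Dio reports download progress through its `onReceiveProgress(received, total)` callback; the sketch below shows the same idea in plain Java by copying a stream to disk and reporting after each chunk. Writing to a temporary `.part` file and moving it into place only once the copy finishes is a design choice of this sketch, not necessarily what the app does, and `ModelDownloader` is a hypothetical name.

```java
import java.io.ByteArrayInputStream;
import java.io.File;
import java.io.FileOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.nio.file.Files;
import java.nio.file.StandardCopyOption;
import java.util.function.BiConsumer;

/** Hypothetical catalog helpers: download with progress, and delete. */
final class ModelDownloader {
    private ModelDownloader() {}

    /**
     * Copies {@code in} to {@code target}, calling onProgress(received, total)
     * after every chunk, much like Dio's onReceiveProgress callback.
     */
    static long copyWithProgress(InputStream in, File target, long total,
                                 BiConsumer<Long, Long> onProgress) throws IOException {
        File part = new File(target.getPath() + ".part");
        long received = 0;
        try (InputStream src = in; OutputStream out = new FileOutputStream(part)) {
            byte[] buffer = new byte[8192];
            int n;
            while ((n = src.read(buffer)) != -1) {
                out.write(buffer, 0, n);
                received += n;
                onProgress.accept(received, total);
            }
        }
        // Only a finished copy gets the real name, so a half-written file
        // never looks like a usable model.
        Files.move(part.toPath(), target.toPath(), StandardCopyOption.REPLACE_EXISTING);
        return received;
    }

    static boolean delete(File model) {
        return model.delete();
    }

    public static void main(String[] args) throws IOException {
        File dir = Files.createTempDirectory("models").toFile();
        File target = new File(dir, "model.tflite");
        byte[] fake = new byte[20_000]; // stands in for the HTTP response body
        copyWithProgress(new ByteArrayInputStream(fake), target, fake.length,
                (received, total) -> System.out.printf("%d%%%n", received * 100 / total));
        System.out.println(target.length() + " bytes, deleted: " + delete(target));
    }
}
```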

Model catalog

The model info is in the release version v1.0 of the repo neuron-models-tests. Clicking on a model's name in the table in the repository's README.md directs you to that model's source. To contribute more models usable for classification, detection, etc., for the app and ultimately the plugin, clone the repo and use the codebase for each tag and framework to test model conversion, quantization, and tracing.

Note: Ensure that the PyTorch model does not require serialization on torch load.

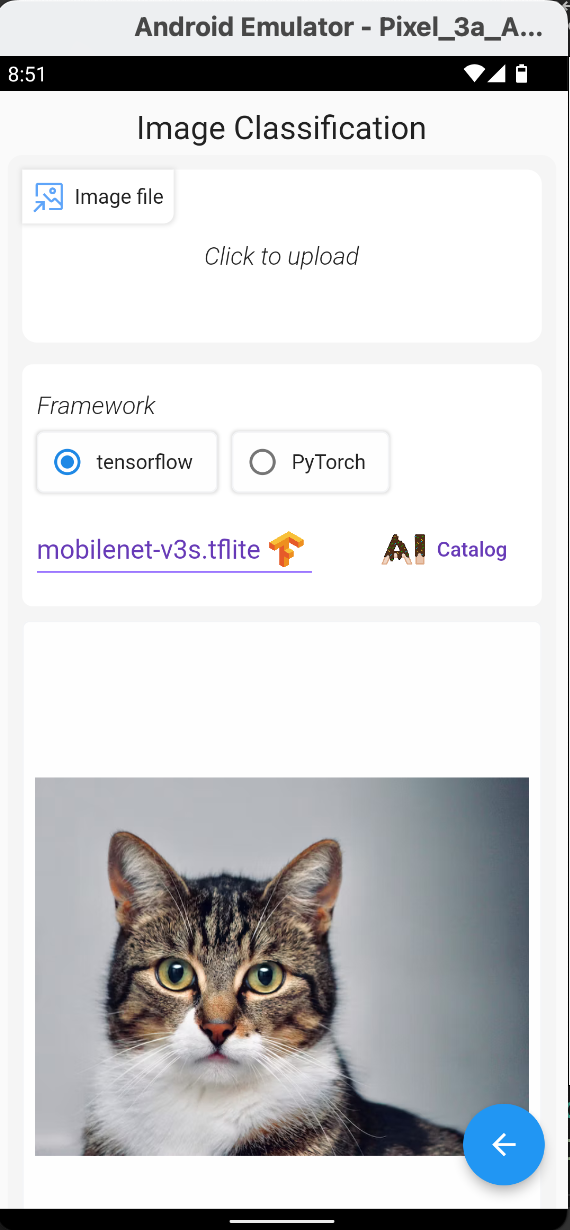

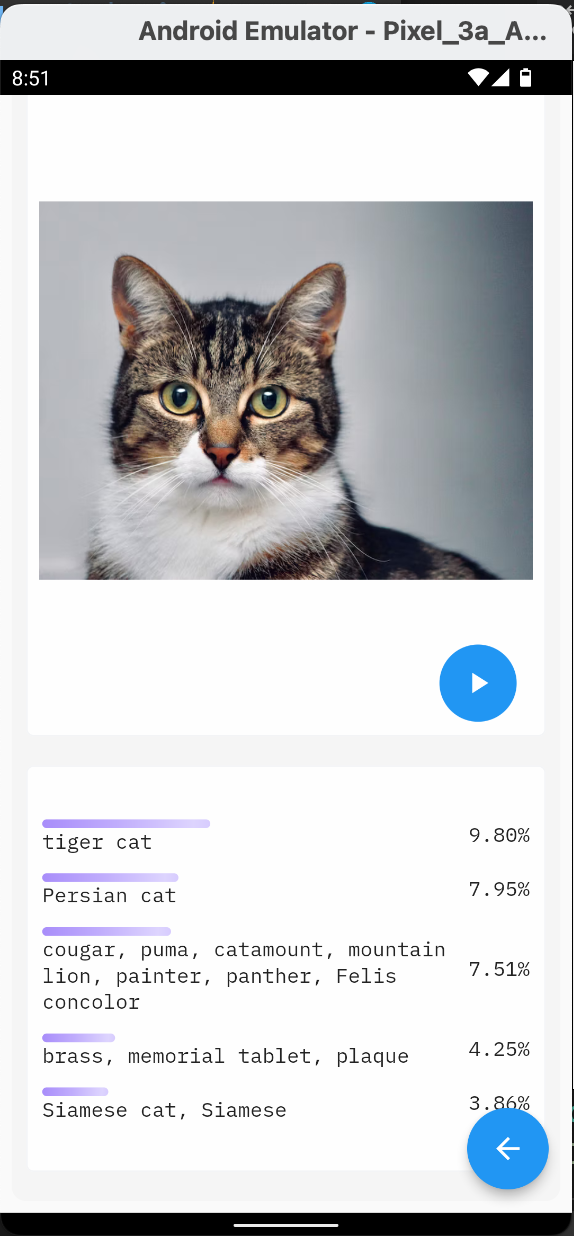

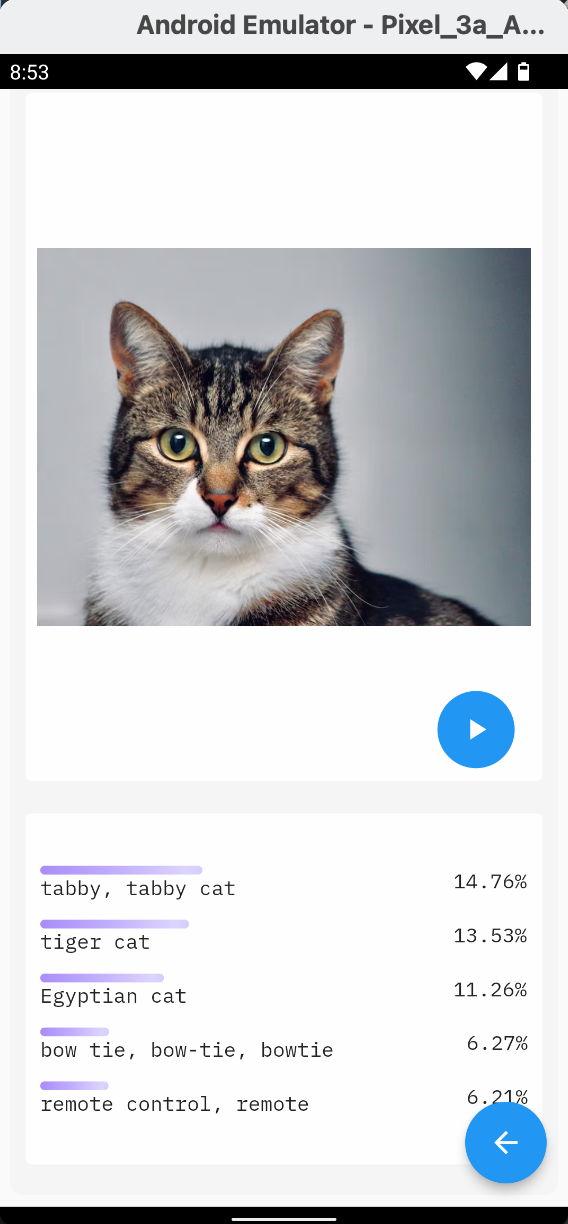

Classification ~ the screenshots illustrate the explicit selection of both TensorFlow and PyTorch models, picture selection from local storage, and inference.

For TensorFlow, the screenshot previews mobilenet-v3s for classification. For PyTorch, I use the model.pt from the official PyTorch Android blog, which illustrates image classification in a native Java codebase, now rebuilt to work in a Flutter application.
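For classification, postprocessing is mostly a matter of turning the output vector into ranked labels. A minimal sketch, assuming the model emits raw logits (some exported classifiers already emit probabilities, in which case the softmax step can be skipped) and that index `i` maps to line `i` of a labels file; `Classification` and the labels in `main` are hypothetical.

```java
import java.util.Arrays;

final class Classification {
    private Classification() {}

    /** Numerically stable softmax: subtracting the max logit avoids overflow in exp(). */
    static float[] softmax(float[] logits) {
        float max = Float.NEGATIVE_INFINITY;
        for (float v : logits) {
            max = Math.max(max, v);
        }
        double[] exps = new double[logits.length];
        double sum = 0;
        for (int i = 0; i < logits.length; i++) {
            exps[i] = Math.exp(logits[i] - max);
            sum += exps[i];
        }
        float[] probs = new float[logits.length];
        for (int i = 0; i < logits.length; i++) {
            probs[i] = (float) (exps[i] / sum);
        }
        return probs;
    }

    /** Indices of the k highest scores, best first (ties keep the lower index first). */
    static int[] topK(float[] scores, int k) {
        Integer[] order = new Integer[scores.length];
        for (int i = 0; i < order.length; i++) {
            order[i] = i;
        }
        // Arrays.sort on objects is stable, so equal scores stay in index order.
        Arrays.sort(order, (a, b) -> Float.compare(scores[b], scores[a]));
        int n = Math.min(k, order.length);
        int[] top = new int[n];
        for (int i = 0; i < n; i++) {
            top[i] = order[i];
        }
        return top;
    }

    public static void main(String[] args) {
        String[] labels = {"cat", "dog", "fox"}; // hypothetical labels file contents
        float[] probs = softmax(new float[]{1f, 3f, 2f});
        for (int i : topK(probs, 2)) {
            System.out.printf("%s: %.3f%n", labels[i], probs[i]);
        }
    }
}
```

Since softmax preserves order, top-k on the raw logits gives the same indices; the softmax only matters when the UI shows confidence values.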

Detection ~ detection has been interesting in its own right. One challenge was deriving non-maximum suppression in Java, which I eventually extracted from the PyTorch object detection demo code.

For detection, the ultralytics repo and models were essential in generating the TFLite version of the YOLO model, using the yolov5m weights. That covers TensorFlow. For PyTorch, the demo mentioned earlier is where I got the link to a TorchScript version of YOLO that did not give me serialization issues when loading on mobile.
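For reference, a YOLOv5 export produces rows of the form `(cx, cy, w, h, objectness, class scores...)`; for a 640x640 input and the 80 COCO classes that is 25200 rows of 85 values. The sketch below decodes such a flattened output into corner-format boxes, scoring each as objectness times the best class score (the convention used in the ultralytics repo). Whether the coordinates come out normalized or in input pixels depends on the export, hence the scale parameters; `YoloDecoder` is a hypothetical name, and class scores are assumed to be sigmoid outputs in [0, 1].

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical decoder for a flattened YOLOv5-style output. */
final class YoloDecoder {
    static final class Detection {
        final float left, top, right, bottom, score;
        final int classIndex;

        Detection(float left, float top, float right, float bottom, float score, int classIndex) {
            this.left = left; this.top = top; this.right = right; this.bottom = bottom;
            this.score = score; this.classIndex = classIndex;
        }
    }

    private YoloDecoder() {}

    /**
     * @param out    rows of (cx, cy, w, h, objectness, class scores...), flattened
     * @param scaleX factor mapping the model's x/width units to image pixels
     * @param scaleY factor mapping the model's y/height units to image pixels
     */
    static List<Detection> decode(float[] out, int numClasses, float confThreshold,
                                  float scaleX, float scaleY) {
        int stride = 5 + numClasses;
        if (out.length % stride != 0) {
            throw new IllegalArgumentException("output length is not a multiple of 5 + numClasses");
        }
        List<Detection> detections = new ArrayList<>();
        for (int base = 0; base < out.length; base += stride) {
            float objectness = out[base + 4];
            // With class scores in [0, 1], a low objectness can never pass the threshold.
            if (objectness < confThreshold) continue;
            int best = 0;
            for (int c = 1; c < numClasses; c++) {
                if (out[base + 5 + c] > out[base + 5 + best]) best = c;
            }
            float score = objectness * out[base + 5 + best];
            if (score < confThreshold) continue;
            float cx = out[base] * scaleX, cy = out[base + 1] * scaleY;
            float halfW = out[base + 2] * scaleX / 2f, halfH = out[base + 3] * scaleY / 2f;
            detections.add(new Detection(cx - halfW, cy - halfH, cx + halfW, cy + halfH, score, best));
        }
        return detections;
    }

    public static void main(String[] args) {
        // Two rows, two classes, coordinates normalized to [0, 1] (an assumption).
        float[] raw = {
            0.5f, 0.5f, 0.2f, 0.4f, 0.9f, 0.1f, 0.8f, // confident, class 1
            0.1f, 0.1f, 0.1f, 0.1f, 0.2f, 0.9f, 0.1f, // low objectness, dropped
        };
        for (Detection d : decode(raw, 2, 0.25f, 640f, 640f)) {
            System.out.printf("class %d score %.2f box [%.0f, %.0f, %.0f, %.0f]%n",
                    d.classIndex, d.score, d.left, d.top, d.right, d.bottom);
        }
    }
}
```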

I ran many detection tests, including trying to export both TensorFlow and PyTorch models with a post-processing layer for non-maximum suppression. However, the restrictions of graph mode, together with the Pythonic nature of the function I wrote, broke the compilation process, or in the PyTorch case, the tracing process that creates TorchScript models.
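Since NMS could not be baked into the exported graphs, it runs as plain code after inference. Below is a generic greedy, class-agnostic NMS sketch in Java: sort by score, keep the best box, discard every remaining box whose IoU with it exceeds a threshold, and repeat. It follows the same general idea as the PyTorch demo's function but is not a copy of it; `Nms` is a hypothetical name, and per-class NMS would simply run this once per class.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Hypothetical, class-agnostic greedy non-maximum suppression. */
final class Nms {
    static final class Box {
        final float left, top, right, bottom, score;

        Box(float left, float top, float right, float bottom, float score) {
            this.left = left; this.top = top; this.right = right; this.bottom = bottom;
            this.score = score;
        }

        float area() {
            return Math.max(0f, right - left) * Math.max(0f, bottom - top);
        }
    }

    private Nms() {}

    /** Intersection over union of two corner-format boxes. */
    static float iou(Box a, Box b) {
        float w = Math.min(a.right, b.right) - Math.max(a.left, b.left);
        float h = Math.min(a.bottom, b.bottom) - Math.max(a.top, b.top);
        if (w <= 0f || h <= 0f) return 0f; // no overlap
        float intersection = w * h;
        return intersection / (a.area() + b.area() - intersection);
    }

    /** Keeps at most {@code limit} boxes, best score first. */
    static List<Box> nonMaxSuppression(List<Box> boxes, float iouThreshold, int limit) {
        List<Box> sorted = new ArrayList<>(boxes);
        sorted.sort((a, b) -> Float.compare(b.score, a.score)); // highest score first
        boolean[] suppressed = new boolean[sorted.size()];
        List<Box> kept = new ArrayList<>();
        for (int i = 0; i < sorted.size() && kept.size() < limit; i++) {
            if (suppressed[i]) continue;
            Box best = sorted.get(i);
            kept.add(best);
            // Drop every lower-scored box that overlaps the kept one too much.
            for (int j = i + 1; j < sorted.size(); j++) {
                if (!suppressed[j] && iou(best, sorted.get(j)) > iouThreshold) {
                    suppressed[j] = true;
                }
            }
        }
        return kept;
    }

    public static void main(String[] args) {
        List<Box> boxes = Arrays.asList(
                new Box(1, 1, 11, 11, 0.8f),    // overlaps the 0.9 box heavily
                new Box(0, 0, 10, 10, 0.9f),
                new Box(20, 20, 30, 30, 0.7f)); // separate object
        for (Box b : nonMaxSuppression(boxes, 0.5f, 10)) {
            System.out.println("kept box with score " + b.score);
        }
    }
}
```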

Contribution

The whole reason I am making this app, and everything built after it including the plugin, open-source is so that the amazing community out there can improve it into the best version possible.

To contribute to the application, be it code conventions, error handling, UI improvements, optimization with Flutter isolates, code shrinking and tree shaking, or other features:

~ clone the GitHub repo

~ run the following in the root folder of the app

```sh
flutter pub get
```

~ open an emulator, or connect a physical device

~ cd to the android folder from the root

~ run the following to download the native Java third-party libraries and install the debug build on the device

```sh
./gradlew installDebug
```

Thank you for reading. Phase two of the project commencing soon!